02 Test Cases

web & no NodePort

Create the configuration file

cat >tomcat_deploy.yaml<<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mytomcat
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mytomcat
  template:
    metadata:
      name: mytomcat
      labels:
        app: mytomcat
    spec:
      containers:
      - name: mytomcat
        image: tomcat:8
        ports:
        - containerPort: 8080
EOF

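Apply the configuration file

kubectl apply -f tomcat_deploy.yaml

Get the pod's IP address (the IP column is only shown with -o wide)

kubectl get pods -o wide

Access the application through the pod address

curl <pod-ip>:8080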
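The pod IP can also be read with a jsonpath query instead of copying it from the table by hand. The following is a minimal sketch, assuming the `app=mytomcat` label from the deployment above and a shell on a machine that can reach the pod network (typically a cluster node). The function names `pod_ip` and `curl_pod` are made up for illustration.

```shell
# Print the IP of the first pod carrying the given app label.
pod_ip() {
  kubectl get pods -l "app=$1" -o jsonpath='{.items[0].status.podIP}'
}

# Wait until the deployment has rolled out, then request the given port.
# Assumes the deployment name equals the app label, as in the manifest above.
curl_pod() {
  kubectl rollout status "deployment/$1" --timeout=120s &&
    curl -sS "http://$(pod_ip "$1"):$2/"
}

# Usage: curl_pod mytomcat 8080
```

Depending on the image version, Tomcat may answer with a 404 page at `/`. Any HTTP response still confirms that the pod is reachable without a NodePort.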

tensorflow & NodePort

Create the deployment configuration file

cat >tensorflow_deploy.yaml<<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tensorflow
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tensorflow
  template:
    metadata:
      name: tensorflow
      labels:
        app: tensorflow
    spec:
      containers:
      - name: tensorflow
        imagePullPolicy: IfNotPresent
        image: tensorflow1_18:v2
        ports:
        - containerPort: 6081
EOF

Create the service configuration file

cat >tensorflow_service_nodeport.yaml<<EOF
apiVersion: v1
kind: Service
metadata:
  name: front-tensorflow
  labels:
    app: tensorflow
spec:
  ports:
  - port: 6081
    targetPort: 6081
    nodePort: 30000
  selector:
    app: tensorflow
  type: NodePort
EOF

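Apply the configuration files

kubectl apply -f tensorflow_deploy.yaml

kubectl apply -f tensorflow_service_nodeport.yaml

Access the application in a browser by opening NodeIP:NodePort (the NodePort is 30000 in the service above)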
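If the node IP and port are not at hand, both can be looked up from the cluster. The sketch below assumes the service name `front-tensorflow` from the manifest above and simply takes the first node in the list. The helper names `node_ip`, `service_node_port`, and `nodeport_url` are illustrative only.

```shell
# Print the InternalIP of the first node in the cluster.
node_ip() {
  kubectl get nodes \
    -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}'
}

# Print the nodePort assigned to the first port of a service.
service_node_port() {
  kubectl get service "$1" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Build the URL to open in the browser.
nodeport_url() {
  echo "http://$(node_ip):$(service_node_port "$1")"
}

# Usage: nodeport_url front-tensorflow
```

A NodePort service listens on every node, so any node's address should work as long as it is reachable from the browser's machine.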

tensorflow & ingress

See Chapter 2, "Installing ingress"

Deploying to a specific node

First, label the node

kubectl label nodes dsai slave=136

cat >tomcat_deploy_node.yaml<<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mytomcat
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mytomcat
  template:
    metadata:
      name: mytomcat
      labels:
        app: mytomcat
    spec:
      containers:
      - name: mytomcat
        image: tomcat:8
        ports:
        - containerPort: 8080
      nodeSelector:
        slave: "136"
EOF

Apply the configuration file

kubectl apply -f tomcat_deploy_node.yaml
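To confirm that the nodeSelector took effect, the node the pod landed on can be compared with the nodes carrying the label. This is a rough sketch, assuming the `slave=136` label and the `app=mytomcat` pod label used above. The function names are hypothetical helpers, not part of kubectl.

```shell
# List the nodes that carry the label used by nodeSelector.
labeled_nodes() {
  kubectl get nodes -l "$1" -o jsonpath='{.items[*].metadata.name}'
}

# Print the node that the first pod with the given app label was scheduled to.
pod_node() {
  kubectl get pods -l "app=$1" -o jsonpath='{.items[0].spec.nodeName}'
}

# Succeed only if the pod runs on one of the labeled nodes.
pod_on_labeled_node() {
  node="$(pod_node "$1")"
  for candidate in $(labeled_nodes "$2"); do
    [ "$candidate" = "$node" ] && return 0
  done
  return 1
}

# Usage: pod_on_labeled_node mytomcat slave=136 && echo "placement OK"
```

If no node carries the label, the pod stays in the Pending state, and `pod_node` prints an empty string.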

GPU scheduling (isolated mode)

cat >deepo_deploy_node.yaml<<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deepo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: deepo
  template:
    metadata:
      name: deepo
      labels:
        app: deepo
    spec:
      containers:
      - name: deepo
        imagePullPolicy: IfNotPresent
        image: wenfengand/deepo:testd
        resources:
          limits:
            nvidia.com/gpu: 1 # must be an integer
      nodeSelector:
        slave: "136"
EOF

kubectl apply -f deepo_deploy_node.yaml

Run kubectl get pods to find the pod name, then open an interactive shell in the container

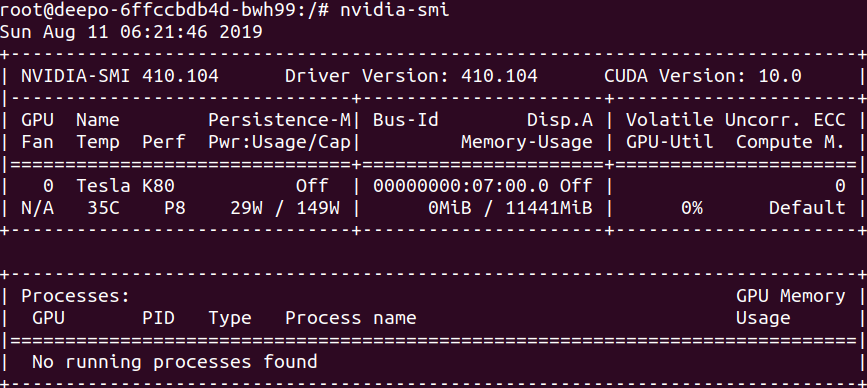

kubectl exec -it deepo-6ffccbdb4d-bwh99 -- /bin/bash

Run nvidia-smi inside the container to see how many GPUs are visible

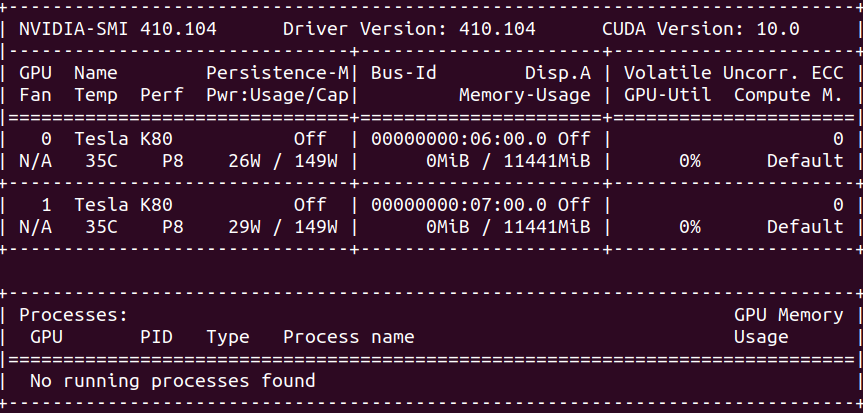

On the GPU node itself, check the total number of GPUs

The pod sees 1 GPU while the slave node has 2, which shows that k8s scheduled the GPU as requested.
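The same comparison can be scripted rather than read off the screenshots. The sketch below assumes that `nvidia-smi` is available in the container and that the node exposes the `nvidia.com/gpu` resource (which requires the NVIDIA device plugin). `gpus_in_pod` and `gpus_on_node` are illustrative names.

```shell
# Count the GPUs visible inside a pod.
# nvidia-smi -L prints one "GPU n: ..." line per device.
gpus_in_pod() {
  kubectl exec "$1" -- nvidia-smi -L | grep -c '^GPU '
}

# Print the number of GPUs the node advertises to the scheduler.
# The dot in the resource name nvidia.com/gpu must be escaped in jsonpath.
gpus_on_node() {
  kubectl get node "$1" -o jsonpath='{.status.allocatable.nvidia\.com/gpu}'
}

# Usage:
#   gpus_in_pod deepo-6ffccbdb4d-bwh99   # pod name from kubectl get pods
#   gpus_on_node dsai
```

With the limit of 1 set in the manifest, the first command is expected to report fewer GPUs than the second on a multi-GPU node.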

GPU scheduling (shared mode)

cat >deepo_deploy_node_share.yaml<<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deepo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: deepo
  template:
    metadata:
      name: deepo
      labels:
        app: deepo
    spec:
      containers:
      - name: deepo
        imagePullPolicy: IfNotPresent
        image: wenfengand/deepo:testd
      nodeSelector:
        slave: "136"
EOF

kubectl apply -f deepo_deploy_node_share.yaml

Running the same commands as in isolated mode shows that 2 GPUs are visible inside the container, the same number as on the node.
